Artificial Intelligence in the Sound Industry

Introduction

Artificial Intelligence (AI) is no longer just a buzzword; it is transforming industries at a rapid pace. In the sound and audio industry, AI is creating smarter systems, automating sound mixing, improving live experiences, and even predicting audience preferences. But what exactly can we expect in the next five years? Let’s take a realistic look at how AI will shape the future of sound.

1. Smarter Live Sound Management

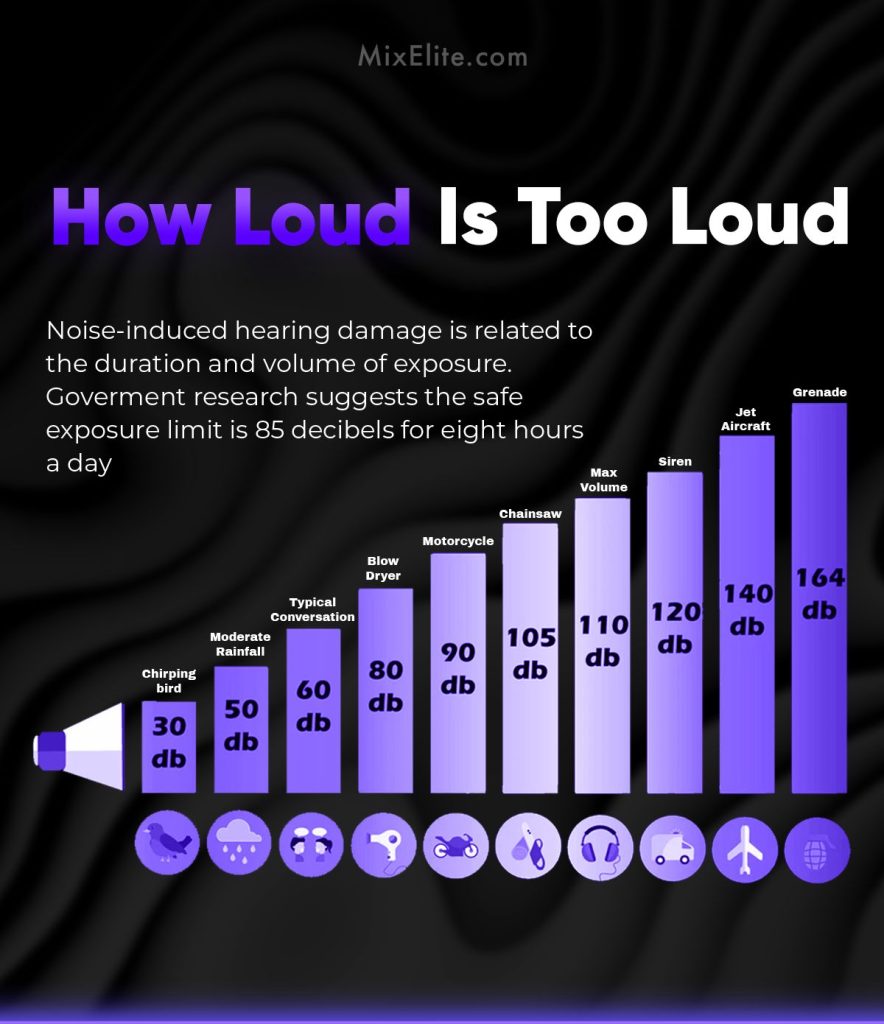

Traditionally, live sound engineers have adjusted levels, equalization, and frequency balance by hand to keep the sound crystal-clear. In the next five years, AI-powered systems are expected to make these adjustments automatically in real time, based on the size of the venue, crowd noise, and, at outdoor events, even weather conditions.

Example: AI tools could automatically rebalance the mix at a concert where thousands of people are cheering, without compromising the clarity of the artist’s voice.

2. AI-Driven Sound Personalization

AI will make personalized sound experiences possible, so that audio can adapt to individual listener preferences during live events. A single PA system cannot suit everyone at once, but AI-enabled headphones or apps could: one person may prefer deeper bass while another wants clearer vocals, and each could have their own mix adjusted instantly.

Example: At a wedding or cultural event, guests could connect to an app that adjusts audio according to their preferences.

3. Predictive Maintenance of Sound Equipment

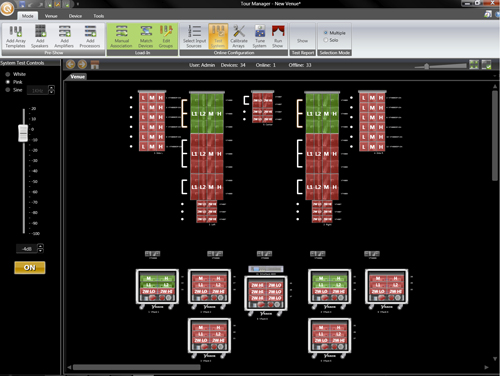

One major issue in the sound industry is equipment failure at crucial moments. By tracking data such as operating hours, temperature, and changes in signal behavior, AI could help predict when a speaker, microphone, or amplifier is likely to fail, allowing technicians to service it beforehand. This would reduce downtime and help events run without interruption.

Example: A concert organizer using an AI monitoring system could receive an alert before the show that a subwoofer needs repair, avoiding last-minute problems.

4. AI in Music & Sound Creation

AI is already being used to generate music, and over the next five years we’re likely to see it assisting sound designers, DJs, and event organizers in creating custom soundscapes. AI won’t replace human creativity, but it can act as a co-creator.

Example: AI could help generate background music for a conference session or create unique soundtracks for a cultural program.

5. Enhanced Audience Engagement

AI will also play a major role in analyzing audience reactions during live shows. By combining facial-expression analysis, motion sensors, and crowd-noise levels, AI systems could suggest changes to tempo, volume, or musical style that boost audience excitement.

Example: If the system detects that the crowd isn’t reacting much during a concert, it could recommend switching to a more energetic mix.

6. Accessibility & Inclusivity in Events

AI will make events more accessible to people with hearing difficulties through real-time transcription, speech enhancement, and audio tailored to individual hearing aids.

Example: At conferences, AI tools can provide instant subtitles and translate speeches into multiple languages, ensuring no one feels left out.

7. Cost-Effective Solutions for Event Organizers

Over the next five years, AI is expected to lower the cost of sound engineering and event audio setups by automating repetitive tasks such as system tuning, feedback suppression, and level balancing. Organizers could then achieve premium sound quality with a smaller technical team.

Example: Smaller weddings or cultural programs will be able to enjoy professional-grade sound systems without overspending.

Conclusion

The future of AI in the sound industry is exciting, practical, and full of opportunities. From smarter live sound management to predictive maintenance and personalized audio, AI will reshape how we experience sound at events, at concerts, and in everyday life.

At SoundKraft, we believe in embracing the latest technology while keeping the human touch alive. Over the next five years, AI will not replace sound engineers; instead, it will empower them with tools that deliver unforgettable sound experiences.